This post presents the outcomes of the 5GASP project across the whole Network Application creation process. It covers not only development and deployment, but also the tests that automatically verify that the Network Application functions correctly within the 5G infrastructure.

Specifically, the initial work of the project laid the conceptual foundations and introduced the envisioned models of an automated CI/CD process spanning a Network Application’s lifecycle. It also defined a novel onboarding and deployment process that is as close to zero-touch as possible, thus simplifying both steps. The outcome of this work is summarized in D3.1.

After a Network Application is onboarded, an automated process performs the required validations. For that purpose, the Network Application is deployed in a restricted and controlled scenario (5GASP), where several infrastructure, functional and developer-customized tests are performed. Specific artifacts conduct the corresponding verification tasks and gather the results, which are then returned to the platform to indicate the feasibility and correctness of the onboarded Network Application. All of these processes run automatically once the Network Application is onboarded, which removes the need for manual operations. The inclusion of the validation process within the Network Application’s lifecycle is described in D3.2.

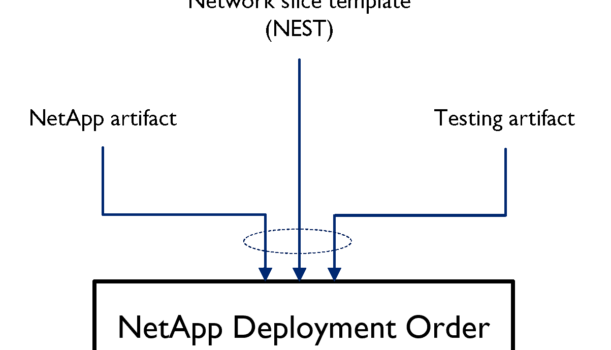

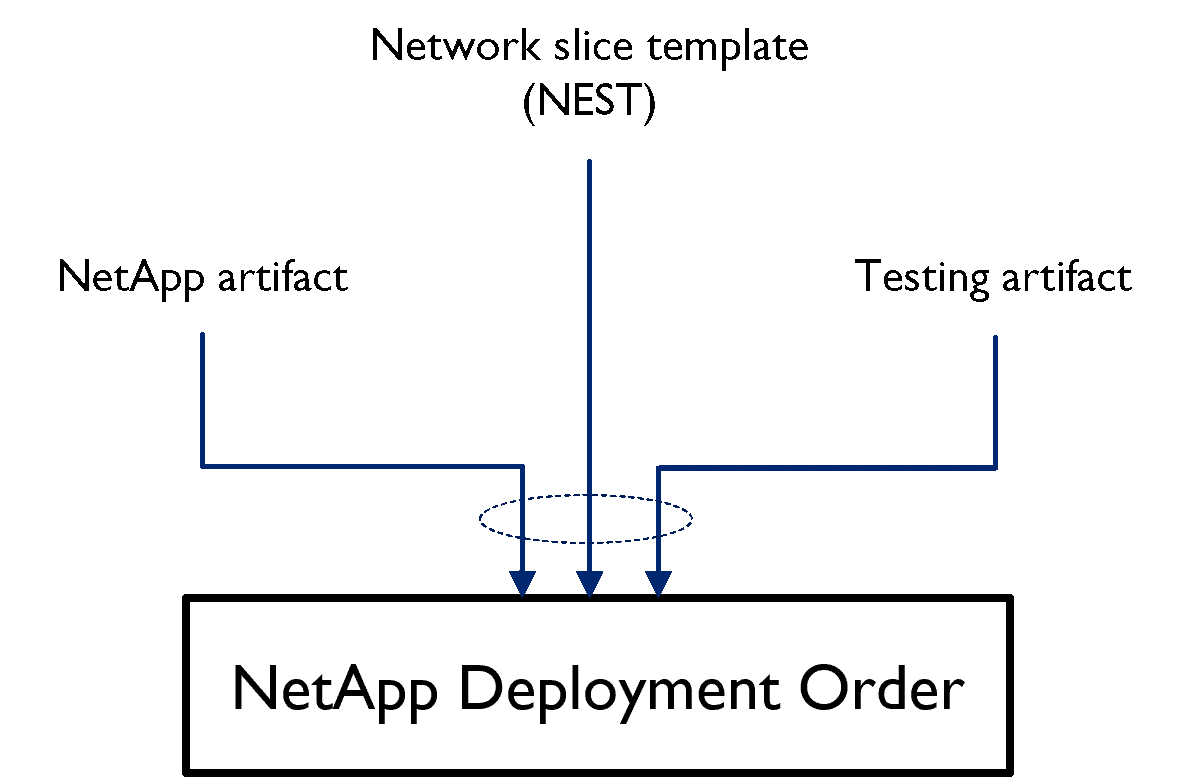

In 5GASP, a Network Application is described as a set of services that fulfill certain use cases in the context of the 5G System. The onboarding procedure was therefore designed with a strong focus on keeping its constituent entities uniform. These entities are based on widely adopted standards, which both fits the project’s goals and ensures interoperability with similar implementations in the same scope. The model chosen to support this process consists of three discrete entities, i.e. Network Application artifacts, hosting network slice, and testing artifacts, as shown in Figure 1.

In short, the Network Application artifacts adopt the ETSI NFV model and are mapped to TM Forum’s (TMF) Service Specifications, thus enabling interaction with external Business/Operations Support Systems (BSS/OSS) through the supported Open API families. As the NFV architecture moves towards a cloud-native approach, 5GASP aims to support a seamless transformation of already containerized applications into VNFs via Kubernetes Helm Charts, leveraging deployment schemes introduced by the standards.

The network slice templates describe the 5G network capabilities offered by the corresponding 5GASP testbeds. The applicable slice template can either be selected by the developer or assigned automatically, based on the requested Key Performance Indicators (KPIs). The supported network slice templates are based on GSMA’s Generic network Slice Template (GST).
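The automatic assignment can be pictured as a simple matching step between requested KPIs and the capabilities each template offers. The following is a minimal sketch under stated assumptions: the template names, the two KPI fields, and the "least over-provisioned" selection rule are all hypothetical and are not taken from the 5GASP implementation or from the GST attribute set.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class SliceTemplate:
    """Hypothetical, heavily reduced view of a slice template."""
    name: str
    downlink_mbps: float  # downlink throughput the slice can offer
    latency_ms: float     # maximum latency the slice guarantees


# Hypothetical catalogue; real templates would carry GST attributes.
TEMPLATES: List[SliceTemplate] = [
    SliceTemplate("embb-basic", downlink_mbps=100, latency_ms=50),
    SliceTemplate("embb-high", downlink_mbps=500, latency_ms=30),
    SliceTemplate("urllc", downlink_mbps=50, latency_ms=5),
]


def select_template(
    min_downlink_mbps: float,
    max_latency_ms: float,
    templates: List[SliceTemplate] = TEMPLATES,
) -> Optional[SliceTemplate]:
    """Return the least over-provisioned template meeting both KPIs."""
    candidates = [
        t for t in templates
        if t.downlink_mbps >= min_downlink_mbps
        and t.latency_ms <= max_latency_ms
    ]
    if not candidates:
        return None  # no testbed slice satisfies the request
    # Assumed policy: prefer the lowest throughput, then the loosest latency.
    return min(candidates, key=lambda t: (t.downlink_mbps, -t.latency_ms))
```

With this catalogue, `select_template(80, 50)` picks `embb-basic`, while a request that no template can satisfy returns `None`, which a portal could surface as a prompt to choose a template manually.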

Lastly, for the testing phase, 5GASP designed and implemented its own test descriptors in the form of YAML files. These descriptors capture the overall testing pipeline, e.g. the type of test executed, the required deployment parameters, etc. Currently, in addition to using the publicly available pre-defined tests, developers can define their own tests with the established descriptor, provided they also supply the respective test scripts.
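To make the idea of a descriptor more concrete, the sketch below models one as a plain Python dictionary, i.e. the structure a YAML parser would return, and runs a few sanity checks on it. The field names (`tests`, `id`, `type`, `parameters`) and the two test types are assumptions chosen for illustration; they are not the actual 5GASP descriptor schema.

```python
from typing import Dict, List

# Hypothetical descriptor content, shown as the dict a YAML loader would yield.
DESCRIPTOR: Dict = {
    "network_application": "example-app",
    "tests": [
        {
            "id": "bandwidth",
            "type": "predefined",
            "parameters": {"target_ip": "10.0.0.5", "threshold_mbps": "100"},
        },
        {
            "id": "login-flow",
            "type": "developer-defined",
            "parameters": {"url": "http://10.0.0.5:8080"},
        },
    ],
}

# Assumed set of test types, mirroring the pre-defined/custom split above.
ALLOWED_TYPES = {"predefined", "developer-defined"}


def validate_descriptor(descriptor: Dict) -> List[str]:
    """Return a list of human-readable problems; empty means valid."""
    tests = descriptor.get("tests")
    if not isinstance(tests, list) or not tests:
        return ["descriptor must contain a non-empty 'tests' list"]

    errors: List[str] = []
    seen_ids = set()
    for index, test in enumerate(tests):
        if not isinstance(test, dict):
            errors.append(f"tests[{index}]: entry must be a mapping")
            continue
        test_id = test.get("id")
        if not test_id:
            errors.append(f"tests[{index}]: missing 'id'")
        elif test_id in seen_ids:
            errors.append(f"tests[{index}]: duplicate id '{test_id}'")
        else:
            seen_ids.add(test_id)
        if test.get("type") not in ALLOWED_TYPES:
            errors.append(f"tests[{index}]: unknown type {test.get('type')!r}")
        if not isinstance(test.get("parameters", {}), dict):
            errors.append(f"tests[{index}]: 'parameters' must be a mapping")
    return errors
```

Collecting all problems instead of failing on the first one lets an onboarding portal report every issue to the developer in a single pass.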

Each of the above model entities is expressed with a standardized set of characteristics (TMF Open APIs). To support this implementation, 5GASP provides a user-friendly portal as part of the Network Application Onboarding and Deployment Services (NODS). NODS integrates a Service and Network Orchestrator, which coordinates the deployments on the underlying facilities, and an interaction point with the 5GASP CI/CD Manager, which is in charge of executing the CI/CD pipeline. The 5GASP NODS is based on the open-source project Openslice.

The deployment process begins as soon as a previously designed service bundle is submitted for fulfillment. This request is expressed as a TMF Service Order. The overall process takes place in two layers. First, the Service Orchestrator receives the unprocessed Service Order and outlines the delivery scheme. The deployment actions are then delegated to the corresponding testbed’s domain. Specifically, the orchestration may occur in a single testbed, or it may employ the Network Orchestrator to lay an interconnecting network mesh between multiple testbeds.

Once a Network Application is successfully deployed, the subsequent validation process can start. NODS triggers it by submitting a TMF Service Test payload to the CI/CD Manager, the entity responsible for coordinating the validation process, which uses this information to generate a validation pipeline dynamically. The triggering payload provides URLs from which test artifacts, such as the Testing Descriptor file, can be obtained, and the CI/CD Manager then requests these artifacts from NODS. If the Network Application developer onboarded their own tests, the Service Test payload also includes URLs for retrieving those developer-defined tests.
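As an illustration of this hand-off, the sketch below pulls artifact URLs out of a Service Test-like payload. The payload layout, the characteristic names, and the `nods.example.org` host are assumptions made for the example; the actual payload exchanged between NODS and the CI/CD Manager may be structured differently.

```python
from typing import Dict
from urllib.parse import urlparse

# Hypothetical payload; field and characteristic names are illustrative only.
SERVICE_TEST: Dict = {
    "id": "hypothetical-test-id",
    "characteristic": [
        {"name": "testDescriptorUrl",
         "value": "https://nods.example.org/artifacts/descriptor.yaml"},
        {"name": "developerTestUrl",
         "value": "https://nods.example.org/artifacts/custom-tests.tar.gz"},
        {"name": "deploymentInfo", "value": "not-a-url"},
    ],
}


def artifact_urls(payload: Dict) -> Dict[str, str]:
    """Map characteristic names to their values when the value is an HTTP(S) URL."""
    urls: Dict[str, str] = {}
    for characteristic in payload.get("characteristic", []):
        value = characteristic.get("value")
        if not isinstance(value, str):
            continue
        parsed = urlparse(value)
        # Keep only absolute http/https URLs; everything else is plain data.
        if parsed.scheme in ("http", "https") and parsed.netloc:
            urls[characteristic.get("name", "")] = value
    return urls
```

Filtering by scheme keeps non-URL characteristics out of the download step, so the component fetching artifacts only ever receives addresses it can actually request.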

After rendering the Testing Descriptor and gathering the developer-defined tests, the CI/CD Manager assembles a validation pipeline. This process relies on the concept of Pipeline as Code: the CI/CD Manager dynamically generates a pipeline configuration file, which is then submitted to the CI/CD Agents that reside at each testbed and perform the validation tasks. The CI/CD Agents, enabled by Jenkins, offer a straightforward API for submitting pipeline configuration files, which greatly simplifies the creation and execution of dynamic validation pipelines.
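The Pipeline as Code idea can be sketched as a function that turns a list of tests into the text of a Jenkins declarative pipeline, with one stage per test. This is a simplified illustration, not the CI/CD Manager's actual generator: the test dictionary layout (`id`, `robot_file`, `parameters`) and the `results/<id>` output directory are assumptions, and parameter values are assumed to be simple strings without backslashes.

```python
import re
import shlex
from typing import Dict, List

# Stage names are restricted so they can be embedded safely in Groovy.
SAFE_ID = re.compile(r"^[A-Za-z0-9_-]+$")


def robot_command(test: Dict) -> str:
    """Build the shell command that runs one Robot Framework test file."""
    args = ["robot", "--outputdir", f"results/{test['id']}"]
    for name, value in sorted(test.get("parameters", {}).items()):
        args += ["--variable", f"{name}:{value}"]
    args.append(test["robot_file"])
    return " ".join(shlex.quote(arg) for arg in args)


def render_jenkinsfile(tests: List[Dict]) -> str:
    """Render a declarative pipeline with one stage per test."""
    if not tests:
        raise ValueError("at least one test is required")
    lines = ["pipeline {", "    agent any", "    stages {"]
    for test in tests:
        if not SAFE_ID.match(test["id"]):
            raise ValueError(f"unsafe test id: {test['id']!r}")
        lines += [
            f"        stage('{test['id']}') {{",
            "            steps {",
            f"                sh '''{robot_command(test)}'''",
            "            }",
            "        }",
        ]
    lines += ["    }", "}"]
    return "\n".join(lines) + "\n"
```

For a test with id `bandwidth`, file `tests/bandwidth.robot`, and parameter `target_ip` set to `10.0.0.5`, the generated stage runs `robot --outputdir results/bandwidth --variable target_ip:10.0.0.5 tests/bandwidth.robot`. How the resulting text is submitted to an agent is left out on purpose, since that depends on the agent's API.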

While the tests run, the CI/CD Agents collect their outputs and results. This task is straightforward with Robot Framework-defined tests, which is the case in 5GASP. When executing tests, Robot Framework transparently creates log and report files in HyperText Markup Language (HTML). These files make the outputs of a test easy to understand and allow Network Application developers to explore the results of their tests in detail.

When all tests have been performed, the CI/CD Agent creates a test report composed of the logs and reports of all executed tests. It then forwards this report to the CI/CD Manager, which, alongside NODS, is responsible for making it available to the Network Application developers through a Test Results Visualization Dashboard (TRVD).
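One simple way to picture this aggregation step is bundling the per-test Robot Framework files into a single archive. The sketch below assumes that each test wrote its output to its own subdirectory of a `results` folder and that the report is a zip file; both are illustrative choices, not a description of the format 5GASP actually uses. The file names `log.html` and `report.html` are Robot Framework's defaults.

```python
import zipfile
from pathlib import Path
from typing import List, Union

# Default names of the HTML files Robot Framework writes per run.
REPORT_FILES = ("log.html", "report.html")


def bundle_reports(results_dir: Union[str, Path],
                   archive_path: Union[str, Path]) -> List[str]:
    """Zip every test's log and report; return the archived member names."""
    results_dir = Path(results_dir)
    added: List[str] = []
    with zipfile.ZipFile(archive_path, "w", zipfile.ZIP_DEFLATED) as archive:
        # One subdirectory per test (assumed layout), visited in a stable order.
        for test_dir in sorted(p for p in results_dir.iterdir() if p.is_dir()):
            for file_name in REPORT_FILES:
                source = test_dir / file_name
                if source.is_file():
                    member = f"{test_dir.name}/{file_name}"
                    archive.write(source, member)
                    added.append(member)
    return added
```

Keeping the test name as the top-level folder inside the archive lets a dashboard link each test to its own log and report without any extra index file.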

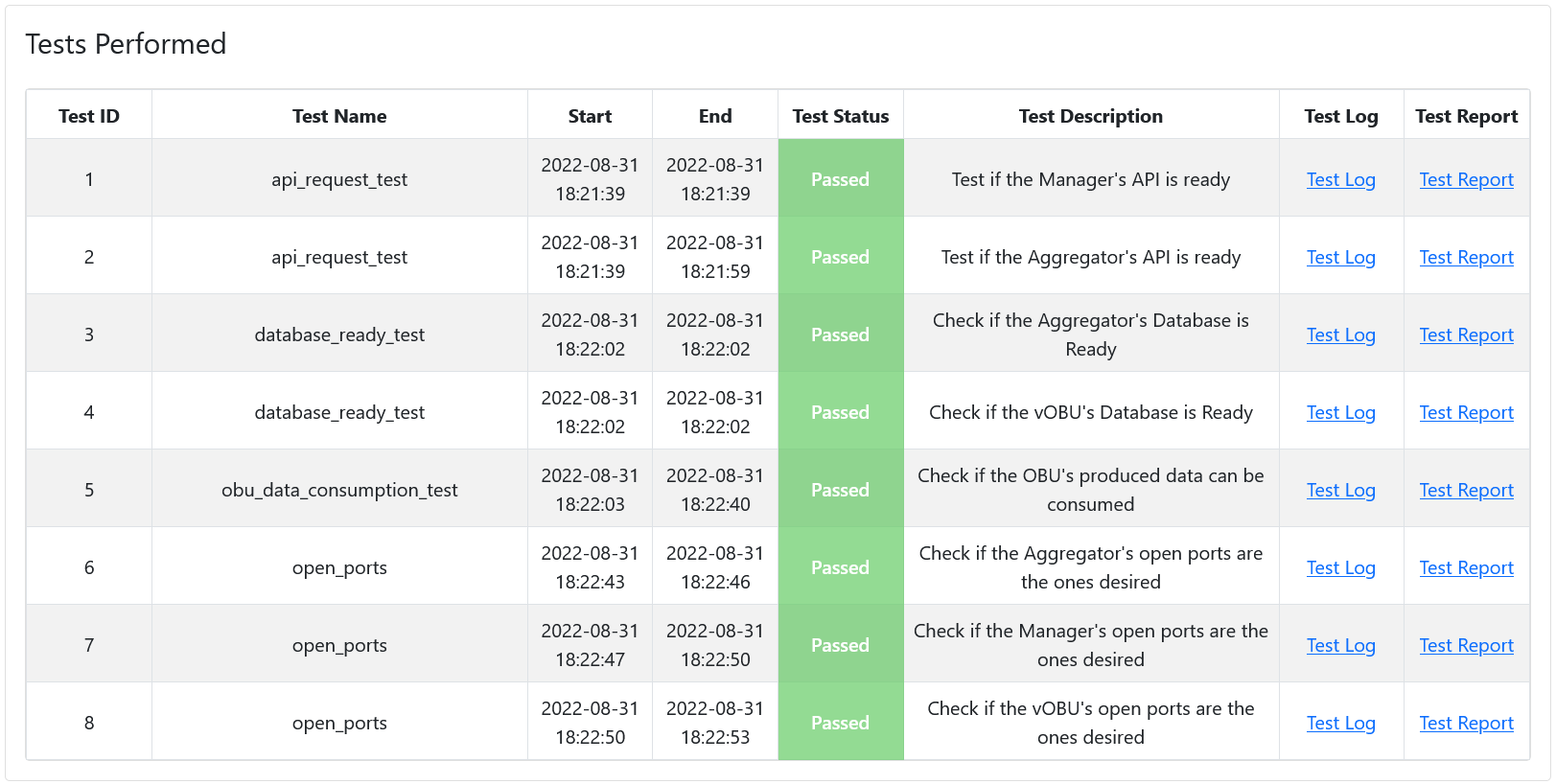

Figure 2 presents a portion of the TRVD’s Graphical User Interface (GUI), which shows how Network Application developers can retrieve the Robot-generated report and log files directly from the dashboard.